In AI We Trust – Or Should We?

A practical guide for small and mid-sized businesses

It’s been about three years since ChatGPT and its friends burst into our lives. In that short span, we’ve gone from “Wow, this is magic!” to “Hmm, is this safe?” and finally to “Is this thing planning to replace me?”

AI hasn’t just disrupted business processes. It has poked at our social fabric too. And like any disruptive house guest, it arrived with excitement, stayed longer than expected, and left us with a mild headache.

Most articles on AI follow a familiar script. They open with bold promises of productivity and innovation, and then quietly end with a warning that reads like the small print on a medicine label:

“May produce inaccurate results. Consult your human before acting.”

Not exactly confidence-building.

So the real question isn’t whether AI is impressive. It clearly is.

The real question is: can we trust it?

Why trusting AI feels uncomfortable

The problem isn’t that AI is mischievous or deliberately misleading. The issue is more subtle. AI doesn’t always know what it doesn’t know. And when it guesses, it often does so with the confidence of a seasoned politician. That’s where scepticism creeps in.

Today, most businesses trust AI for what I call the “safe stuff”. Writing emails. Summarising long documents. Analysing structured data. Generating images. Answering questions from well-curated internal or public sources.

And that’s perfectly reasonable. These are areas where AI performs consistently well. There is also an explosion of niche tools for image, audio, and video enhancement. Some are genuinely impressive. Others are… enthusiastic attempts. Let’s file those under “nice try”.

The anxiety rises when AI moves into more serious territory. Medical diagnostics. Document intelligence. Automated decision-making. These fields show promise, but concerns around accuracy, bias, hallucinations, and transparency still make people uneasy. No hospital wants an AI that confidently concludes an X-ray looks like a chicken wing.

So, can AI replace humans today?

The short answer is no.

The slightly longer answer is not yet, and that’s actually a good thing.

AI is excellent at patterns. Humans are excellent at context, judgement, and consequence. Expecting AI to replace humans is like asking Excel to run a board meeting. Powerful tool. Wrong job.

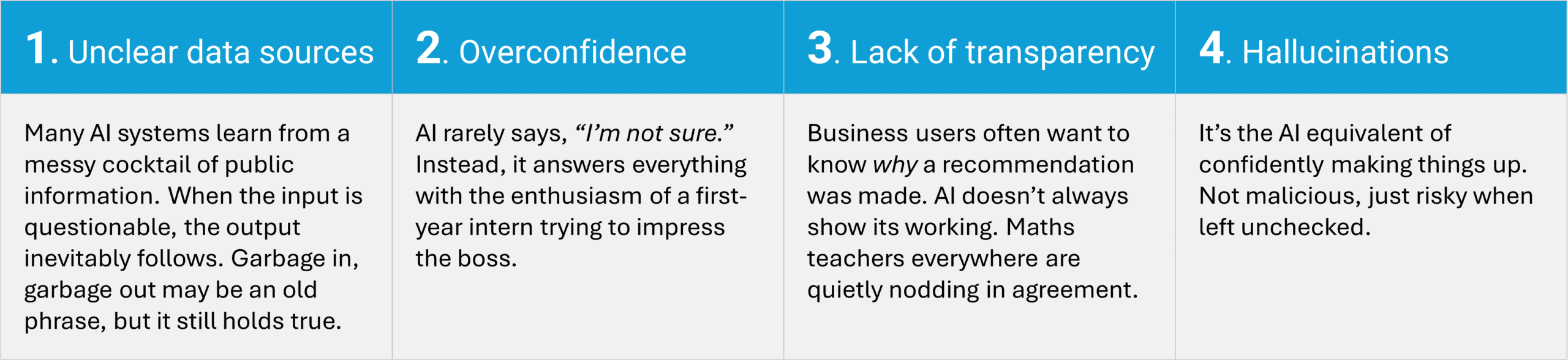

Where the trust gap really comes from

If we peel back the layers, the trust gap usually comes down to a few recurring themes: accuracy, bias, hallucinations, and transparency.

So how do we build trust in AI? There is no single switch

One of the biggest misconceptions about AI is that trust can be “turned on” by choosing the right tool. In reality, trust is not a feature. It is the outcome of good design, good data, and good discipline.

Think of it like building a bridge. You don’t rely on one pillar. You use several, each reinforcing the other.

Here are the practical pillars that work especially well for small and mid-sized businesses.

1. Start with better data, not bigger AI

AI is only as reliable as the information it learns from and refers to. Many trust issues stem from vague or unreliable data sources.

This is where approaches like Retrieval-Augmented Generation (RAG) become valuable. RAG allows AI to ground its answers in your own internal documents – approved policies, product information, SOPs, contracts, and knowledge articles.

When AI is forced to “look things up” before answering, it behaves less like a guesser and more like a reference assistant.

For SMEs, this is powerful because you don’t need a large knowledge-management team. Your existing documents, when organised sensibly, become the foundation for trustworthy AI.

But it’s important to be clear: RAG improves accuracy, but it doesn’t eliminate responsibility. It works best when combined with other controls.
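To make the “look things up first” idea concrete, here is a minimal sketch of the retrieval half of RAG in Python. It is an illustration only. The documents, the `retrieve` and `build_prompt` helpers, and the prompt wording are all hypothetical, and the keyword-overlap scoring is a deliberately naive stand-in for the embedding-based search that production systems usually rely on. No AI model is called; the sketch stops at the prompt you would hand to one.

```python
import re

# Hypothetical internal documents. In practice these would be your own
# approved policies, SOPs, product sheets, and so on.
DOCUMENTS = {
    "refund-policy": "Customers may request a refund within 30 days of purchase with a valid receipt.",
    "shipping-sop": "Standard orders ship within 2 business days from the main warehouse.",
    "leave-policy": "Employees accrue 1.5 days of paid leave per month of service.",
}


def tokenize(text):
    """Lower-case the text and return its set of words and numbers."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, top_k=2):
    """Return up to top_k document ids, ranked by shared-word count (naive)."""
    question_words = tokenize(question)
    scored = []
    for doc_id, text in documents.items():
        overlap = len(question_words & tokenize(text))
        if overlap > 0:
            scored.append((overlap, doc_id))
    # Highest overlap first; ties broken alphabetically for repeatability.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for _, doc_id in scored[:top_k]]


def build_prompt(question, documents, top_k=2):
    """Build a grounded prompt, or return None when nothing relevant is found."""
    doc_ids = retrieve(question, documents, top_k)
    if not doc_ids:
        return None  # Better to escalate to a person than to let the model guess.
    context = "\n".join(f"[{doc_id}] {documents[doc_id]}" for doc_id in doc_ids)
    return (
        "Answer using only the sources below and cite the source id. "
        "If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )
```

Calling `build_prompt("How many days do I have to request a refund?", DOCUMENTS, top_k=1)` produces a prompt that contains only the refund policy. A question that matches nothing returns `None`, which is a natural point to hand over to a human.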

2. Use the right-sized models for the job

Not every problem needs a massive general-purpose AI model. In many cases, smaller, focused models trained for specific industries or functions perform better and are easier to control.

These small language models are typically faster and cheaper to run, and easier to explain to business stakeholders. More importantly, their narrower scope can reduce the risk of unexpected behaviour.

In simple terms, it’s better to use a calculator designed for accounting than a supercomputer that also knows poetry.

3. Design clear boundaries for what AI can and cannot do

Trust improves dramatically when AI operates within clearly defined limits.

Before deploying any AI solution, businesses should be able to answer a few basic questions:

- What types of decisions can AI support?

- Where must a human always approve?

- What data sources are allowed?

- What level of accuracy is acceptable?

These boundaries turn AI from an unpredictable black box into a governed business tool.
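One way to keep those answers from living only in a slide deck is to write them down as a small policy that software can check. The sketch below is a hypothetical example in Python. The `AIPolicy` fields, the decision names, the source names, and the 95% accuracy figure are all assumptions made for illustration, not recommendations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AIPolicy:
    supported_decisions: frozenset      # What types of decisions can AI support?
    human_approval_required: frozenset  # Where must a human always approve?
    allowed_sources: frozenset          # What data sources are allowed?
    min_accuracy: float                 # What level of accuracy is acceptable?


# Hypothetical policy for illustration only.
EXAMPLE_POLICY = AIPolicy(
    supported_decisions=frozenset({"draft_email", "credit_limit_change"}),
    human_approval_required=frozenset({"credit_limit_change"}),
    allowed_sources=frozenset({"crm", "policy_docs"}),
    min_accuracy=0.95,
)


def check_request(policy, decision_type, sources):
    """Return a short verdict for a proposed AI task."""
    if decision_type not in policy.supported_decisions:
        return "blocked: decision type not supported"
    disallowed = sorted(set(sources) - policy.allowed_sources)
    if disallowed:
        return "blocked: disallowed sources " + ", ".join(disallowed)
    if decision_type in policy.human_approval_required:
        return "allowed: human approval required"
    return "allowed"


def accuracy_ok(policy, correct, total):
    """Compare spot-check results (correct out of total) with the target."""
    if total <= 0:
        raise ValueError("total must be positive")
    return correct / total >= policy.min_accuracy
```

With this policy, `check_request(EXAMPLE_POLICY, "draft_email", ["crm"])` is allowed outright, while a credit limit change is allowed only with human approval. Any task type not on the list is blocked by default, which is the safer direction to fail in.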

4. Treat AI like a business system, not a magic box

Reliable systems have expectations. So should AI.

Many organisations now define service levels for AI just as they do for software or vendors. These typically cover response time, update frequency, accuracy targets, logging, and privacy safeguards.

These measures don’t slow AI down. They make it predictable. And predictability is the foundation of trust.
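As a rough illustration of what checking a service level might look like, the Python sketch below scores a log of AI interactions against two targets, response time and accuracy. The `Interaction` record, the `sla_report` function, and the idea that a human reviewer marks each answer correct or not are assumptions for the example. Real targets and logging formats will differ from business to business.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Interaction:
    latency_seconds: float  # how long the AI took to respond
    correct: bool           # as judged by a human reviewer (assumed process)


def sla_report(log, max_latency_seconds, min_accuracy):
    """Summarise whether logged interactions met the agreed targets."""
    if not log:
        raise ValueError("no interactions logged")
    accuracy = sum(1 for item in log if item.correct) / len(log)
    slow = sum(1 for item in log if item.latency_seconds > max_latency_seconds)
    return {
        "accuracy": accuracy,
        "accuracy_met": accuracy >= min_accuracy,
        "slow_responses": slow,
        "latency_met": slow == 0,
    }


# Hypothetical week of reviewed interactions.
SAMPLE_LOG = [
    Interaction(latency_seconds=1.2, correct=True),
    Interaction(latency_seconds=0.8, correct=True),
    Interaction(latency_seconds=6.0, correct=False),
    Interaction(latency_seconds=2.1, correct=True),
]
```

Running `sla_report(SAMPLE_LOG, max_latency_seconds=5.0, min_accuracy=0.9)` on this sample flags one slow response and an accuracy of 0.75, which is below target. The point is less the arithmetic than the habit: if interactions are logged and reviewed, the service level becomes something you can measure rather than hope for.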

5. Keep humans firmly in the loop

The most successful AI deployments follow a simple rule: AI assists, humans decide.

AI can draft content, analyse data, highlight risks, and suggest options. Humans apply judgement, context, ethics, and accountability.

This approach not only reduces risk but also reassures employees that AI is a partner, not a replacement.
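The “AI assists, humans decide” rule can also be enforced in software rather than left to good intentions. The Python sketch below is a minimal, hypothetical approval gate: an AI-generated draft cannot be published until a named person has approved it. The `Draft` record and the `review` and `publish` functions are invented for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Draft:
    content: str
    status: str = "pending"         # pending, approved, or rejected
    reviewer: Optional[str] = None  # the human accountable for the decision


def review(draft, reviewer, approve):
    """Record a human decision on an AI-generated draft."""
    if not reviewer:
        raise ValueError("a named reviewer is required")
    draft.status = "approved" if approve else "rejected"
    draft.reviewer = reviewer
    return draft


def publish(draft):
    """Release the content only if a human has approved it."""
    if draft.status != "approved":
        raise PermissionError("a human must approve this draft first")
    return draft.content
```

Calling `publish` on a fresh draft raises `PermissionError`. After `review(draft, "a.reviewer", approve=True)` it returns the content, and the draft carries the reviewer’s name, so accountability stays with a person.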

6. Build understanding before scaling usage

Many AI trust issues are emotional rather than technical. People fear what they don’t understand.

A short, practical session explaining what AI does well, where it struggles, and how to use it safely can dramatically reduce resistance. When employees understand limitations, they become better supervisors of AI output.

7. Be open with customers and stakeholders

If AI is part of your product or process, transparency builds confidence.

Explaining how AI is used, what safeguards exist, and where humans remain involved reassures customers that AI is enhancing quality, not cutting corners.

Conclusion: Trust Is Built, Not Assumed

AI is already part of business. The real choice is whether we use it thoughtfully or let it run unchecked. AI doesn’t need blind trust. It needs informed trust.

When grounded in the right data, guided by clear rules, and supported by human judgement, AI becomes a quiet force multiplier. It won’t replace people, but it will reward businesses that learn how to work with it.